Last month, Unilever CMO Keith Weed addressed the IAB's annual leadership conference and urged brands to work with digital platforms to ensure their messaging appears in a “brand-suitable” environment.

With 2017 the first year in which digital ad spending surpassed TV, brands need more innovative, adaptive ways to find safe spaces for their messaging in a changing digital video ecosystem.

Here’s a quick summary of the brand safety concerns marketers have raised in recent months:

- November 2017 – A Teads survey of 104 CMOs and senior executives at US brands found that more than three quarters (78%) of marketing heads have become more concerned about brand safety in the last 12 months.

- December 2017 – A Digiday survey of 30 brand marketers showed that, when it comes to maintaining brand safety, brands place more responsibility on themselves than on agencies, vendors, or publishers.

- January 2018 – Logan Paul’s now-infamous “Suicide Forest” video on YouTube sparks outrage from pretty much everyone: consumers, viewers, advertisers, you name it.

- February 2018 – Logan Paul is suspended and later removed from YouTube’s monetization program. YouTube issues new monetization rules and guidelines for channels that create content deemed brand-unsafe.

But despite calls from the industry, YouTube’s top accounts are still littered with unsafe or unsavory content. Marketers are trading scale for safety, and it’s time to put technology to work to meet the needs of a changing video advertising ecosystem.

Thanks to innovations in computer vision and AI, content and themes inside videos can now be detected at a scale never seen before.

Delmondo recently used Uru’s Brand Safety API to automatically analyze the video and audio of recent uploads on some of YouTube’s top influencer channels as part of a new, in-depth report. You can request the full report here or read on for some of the key findings.

Methodology

We analyzed the 25 most recent videos from each of the 17 top influencers on YouTube, using SocialBlade’s ranking of global YouTube channels by subscribers and excluding channels that belong to mainstream music artists.

Uru uses a combination of computer vision, natural language processing, and other AI techniques to generate its proprietary video brand safety scores. These tools scour each video’s audio and visual data for a long list of brand safety red flags. Each red flag is assigned a weight based on our in-depth field research with brands into the types of content they wish to avoid sponsoring or being associated with.

These red flags include:

- Unsafe objects and themes such as weapons, drugs, terrorism, and celebrity scandals;

- Unsafe language such as profanities, misogyny, and hate speech;

- Paid or sponsored content;

- Negative sentiment;

- Not-safe-for-work (NSFW) material (nudity and extreme violence).

Every input video starts with a brand safety score of 1, meaning it is totally safe. For each occurrence of a red flag that Uru finds, the score is reduced according to the weight assigned to that red flag. The lowest possible brand safety score is 0, meaning the video is completely unsafe.
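To make the scoring rule concrete, here is a minimal sketch of a penalty-based score. Uru’s actual weights and formula are proprietary, so everything below is an assumption for illustration: the flag names and `HYPOTHETICAL_WEIGHTS` values are invented, and each occurrence is assumed to subtract its weight from the score, with the result floored at 0.

```python
from collections import Counter

# Hypothetical red-flag weights, invented for illustration only.
# Uru's real weights come from its field research and are not public.
HYPOTHETICAL_WEIGHTS = {
    "profanity": 0.02,
    "negative_sentiment": 0.05,
    "firearm": 0.10,
    "graphic_violence": 0.15,
    "nudity": 0.50,
}


def brand_safety_score(red_flags, weights=HYPOTHETICAL_WEIGHTS):
    """Score a video from its list of detected red-flag occurrences.

    Assumed model: start at 1.0 (totally safe), subtract the weight of
    each occurrence, and never go below 0.0 (completely unsafe).
    Flags missing from `weights` are treated as carrying no penalty.
    """
    score = 1.0
    for flag, count in Counter(red_flags).items():
        score -= weights.get(flag, 0.0) * count
    return max(0.0, score)


# A video with five profanities and one firearm scores about 0.8.
print(brand_safety_score(["profanity"] * 5 + ["firearm"]))
```

A subtractive model is only one option; a multiplicative penalty (multiplying the score by `1 - weight` per occurrence) would also stay between 0 and 1, and the source does not say which approach Uru uses.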

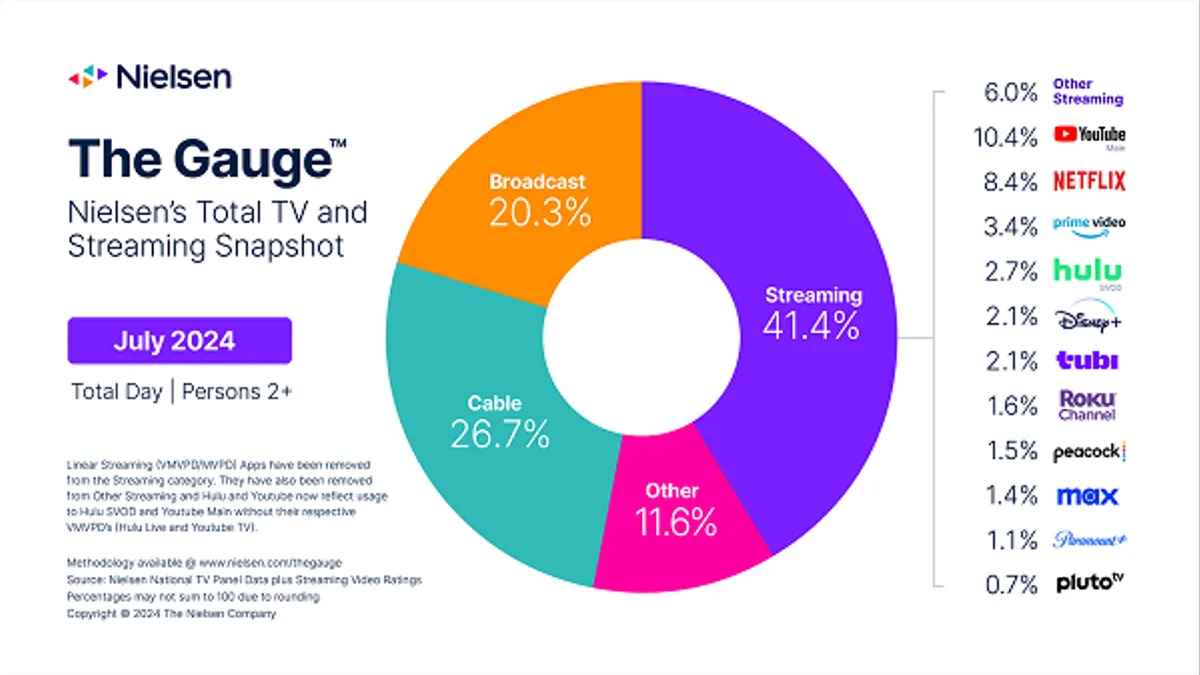

Here are some of the key findings:

1. Surprisingly, despite 2017’s numerous brand safety scandals, YouTube’s most popular influencer channels still contain a lot of content that would be deemed brand-unsafe. Based on our analysis, content on these channels is still more likely to be brand-safe than brand-unsafe, but only by a small margin.

2. YouTubers use a lot of bad language. YouTube’s most popular channels still contain unsafe language (such as profanities, misogyny, and hate speech), along with negative sentiment and unsafe objects and themes (such as firearms and graphic violence).

Of the videos we examined:

- 67% contained unsafe language

- 61% contained negative sentiment

- 16% referenced or showed firearms

In addition, a surprising share of videos (more than 15% on one popular channel) contained graphic violence, usually from gory video game footage.

3. Engagement thrives in brand-safe environments. The data suggest that audiences care about brand safety. While you might assume that divisive speech or unsafe themes would be better at sparking conversation, the YouTube audience in our sample didn’t respond well to them: creators who publish such content received fewer views and less engagement.

Of the videos we examined, those from creators with brand safety scores greater than 0.7 generated:

- 38% higher average views per video

- 73% higher average engagements per video

Videos from creators with brand safety scores less than 0.7 generated:

- 26% fewer average views per video

- 51% fewer average engagements per video
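The comparison above can be sketched as a threshold split at a creator-level score of 0.7. The report does not state what the percentages are measured against, so this sketch assumes each group’s per-video average is compared with the average across all videos in the sample; the `Video` record, `creator_scores` mapping, and function names are hypothetical, and creators scoring exactly 0.7 are left out to mirror the “greater than” and “less than” wording.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Video:
    # Hypothetical per-video record; field names are illustrative.
    creator: str
    views: int
    engagements: int


def pct_diff(group_avg, baseline_avg):
    """Percent difference of a group's average relative to a baseline."""
    return (group_avg - baseline_avg) / baseline_avg * 100


def compare_by_threshold(videos, creator_scores, metric, threshold=0.7):
    """Return (high_pct, low_pct) for creators above/below `threshold`.

    Assumption: the baseline is the per-video average over ALL videos.
    Creators scoring exactly `threshold` fall into neither group.
    Raises statistics.StatisticsError if either group is empty.
    """
    def metric_values(vids):
        return [getattr(v, metric) for v in vids]

    baseline = mean(metric_values(videos))
    high = [v for v in videos if creator_scores[v.creator] > threshold]
    low = [v for v in videos if creator_scores[v.creator] < threshold]
    return (
        pct_diff(mean(metric_values(high)), baseline),
        pct_diff(mean(metric_values(low)), baseline),
    )


# Toy data, invented for illustration.
videos = [
    Video("a", 200, 20), Video("a", 100, 10),
    Video("b", 60, 3), Video("b", 40, 1),
]
creator_scores = {"a": 0.85, "b": 0.55}
print(compare_by_threshold(videos, creator_scores, "views"))  # (50.0, -50.0)
```

Under a different baseline (for example, comparing the two groups directly with each other), the same data would produce different percentages, which is why the baseline matters when reading figures like these.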

In other words, creators known for unsafe content or language in their videos tend to get less engagement overall than creators known for safer material.

4. Computer vision, machine learning, and AI working together will become an important tool for detecting brand safety issues in YouTube videos at scale and in near real time. This will help advertisers monitor the influencers they sponsor and decide which content on the platform is safe to target with marketing and ads.

Brand safety isn’t just important for brand reputation and awareness; it’s crucial, because all brands are becoming direct-to-consumer brands. Without trusted relationships, consumers won’t be as willing to part with their data, so sellers and buyers need to work in concert to maintain this balance.

We’ve also summarized some of the key findings in the infographic below. Again, you can get access to the full report by getting in touch.